Helping prosecutors make race-blind charging decisions using AI.

Prosecutors have nearly absolute discretion to charge or dismiss criminal cases. There is concern, however, that these high-stakes judgments may not be made fairly.

We designed an algorithm that automatically redacts race-related information from free-text case narratives, allowing prosecutors to make a race-blind decision about whether to charge each arrestee. We piloted our algorithm at the San Francisco and Yolo County District Attorney offices on thousands of incoming cases. Our analysis shows that the redaction algorithm obscures race-related information close to the theoretical limit. Blind charging also helps prosecutors demonstrate that they have taken concrete steps to ensure fairness in the charging process, potentially improving perceptions of procedural justice among their constituents.
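The page does not spell out how the redaction works internally, so the following is only a hypothetical, much-simplified rule-based illustration of the general idea, not the project's algorithm. It replaces known names with role placeholders, masks physical descriptors such as hair and eye color, and masks explicit race terms. The function name `redact`, the term lists, and the placeholder labels are all assumptions made for this sketch.

```python
import re

# Hypothetical, illustrative term list; real coverage would need to be far broader.
RACE_TERMS = ["white", "black", "hispanic", "latino", "latina", "asian"]

# Hypothetical physical-descriptor patterns (hair and eye color only).
DESCRIPTOR_PATTERN = re.compile(
    r"\b(?:blond|blonde|brown|black|red)\s+hair\b"
    r"|\b(?:blue|brown|green|hazel)\s+eyes\b",
    re.IGNORECASE,
)

RACE_PATTERN = re.compile(r"\b(?:" + "|".join(RACE_TERMS) + r")\b", re.IGNORECASE)


def redact(narrative: str, names: dict[str, str]) -> str:
    """Toy redaction: names -> role placeholders, then descriptors, then race terms."""
    text = narrative
    # Replace longer names first so "John Smith" is masked before "Smith".
    for name in sorted(names, key=len, reverse=True):
        placeholder = f"[{names[name]}]"
        # A function replacement avoids re.sub interpreting backslashes in the label.
        text = re.sub(rf"\b{re.escape(name)}\b", lambda _m: placeholder, text)
    # Mask descriptors before race terms so "black hair" is not split into "[race] hair".
    text = DESCRIPTOR_PATTERN.sub("[physical description]", text)
    text = RACE_PATTERN.sub("[race]", text)
    return text


if __name__ == "__main__":
    narrative = (
        "Officers stopped John Smith, a white male with blond hair and blue eyes. "
        "Smith told Jane Doe he was headed home."
    )
    roles = {"John Smith": "Suspect 1", "Smith": "Suspect 1", "Jane Doe": "Officer 1"}
    print(redact(narrative, roles))
```

Keyword matching like this both misses indirect cues (a neighborhood name, for example, can act as a proxy for race) and over-redacts (a "black car" would become "[race] car"), so it should be read only as a starting point for understanding the task.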

In 2022, legislators in California recognized the success of our pilot deployments and passed AB-2778, which required prosecutors across the state to adopt blind charging by 2025. We are now using large language models (e.g., GPT-4o) to automatically redact case records for prosecutors across the country as part of a large-scale experiment evaluating the impact of blind charging on charging outcomes.

Read more about our project at blindcharging.org.

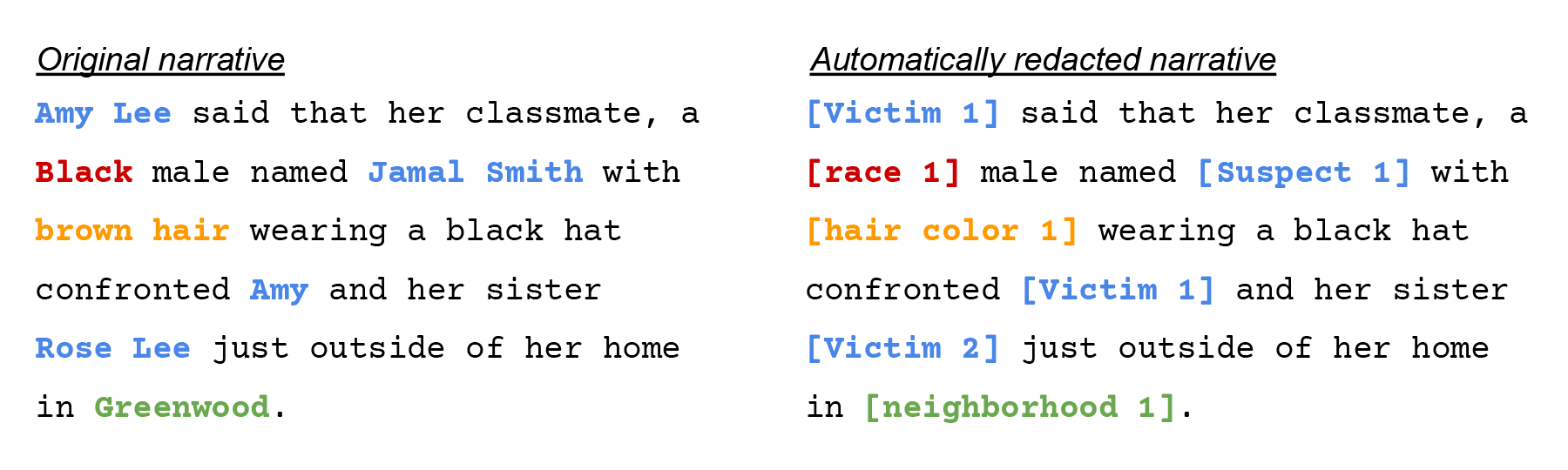

A hypothetical example of our redaction algorithm obscuring information that could be used to infer an individual’s race.